About six years ago, I stumbled across some papers in the field of evolutionary biology that eventually led me to question many of my most basic assumptions about software development. What started as a bit of a crazy thought eventually began to offer explanations for everything from the Lean Startup movement and T-shaped skills to the popularity of microservices and why everyone uses JavaScript even though Haskell is the objectively superior language <trollface>.

The papers introduced me to the concept biologists call “degeneracy” and its impact on the evolvability of a species. It’s taken me many years to realize that degeneracy is a common thread linking many of the best practices of software development, while simultaneously calling into question many of our rituals and even the basic paradigm of software as an exercise of “engineering”.

Degeneracy: What is It?

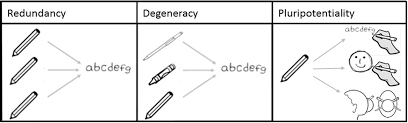

Biologists use the word degeneracy to describe structurally dissimilar components which can perform similar functions. It is related to redundancy, which describes structurally similar components which perform similar functions. Context is a key differentiator. Redundancy describes things which behave similarly regardless of context. Degeneracy exists when one component could—in certain situations—“sub in” for another, but usually fulfills another role.

Image from Hidden in plain view, degeneracy in complex systems
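To make the distinction concrete in software terms, here is a minimal Python sketch. Everything in it is hypothetical and invented for illustration (the `WebServer` and `MailServer` classes, the `fetch` helper, the page content). It only aims to show two identical components covering for each other (redundancy) versus a structurally different component, with a different main job, stepping in when the context demands it (degeneracy).

```python
class WebServer:
    """Dedicated component: its only job is serving pages."""

    def __init__(self, pages):
        self.pages = dict(pages)
        self.up = True

    def get(self, path):
        if not self.up:
            raise ConnectionError("web server is down")
        return self.pages[path]


class MailServer:
    """Different structure and a different main job (mail), but it keeps
    a copy of the site and can sub in for the web server when needed."""

    def __init__(self, site_copy):
        self.mailboxes = {}
        self.site_copy = dict(site_copy)
        self.up = True

    def deliver(self, user, message):
        self.mailboxes.setdefault(user, []).append(message)

    def get(self, path):
        if not self.up:
            raise ConnectionError("mail server is down")
        return self.site_copy[path]


def fetch(path, providers):
    """Return the page from the first provider that responds."""
    for provider in providers:
        try:
            return provider.get(path)
        except ConnectionError:
            continue  # try the next provider
    raise ConnectionError("no provider could serve " + path)


pages = {"/": "<h1>Hello</h1>"}

# Redundancy: two structurally identical servers; one covers for the other.
web_a, web_b = WebServer(pages), WebServer(pages)
web_a.up = False
redundant_result = fetch("/", [web_a, web_b])

# Degeneracy: both web servers are down, so the mail server subs in.
mail = MailServer(pages)
web_b.up = False
degenerate_result = fetch("/", [web_a, web_b, mail])
```

In this sketch the mail server spends most of its life delivering mail; serving the page is something it can do, not what it is for.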


If you’ve ever deployed a cluster of servers, you understand the value of redundancy; it’s double the cost, but half the risk. The CFO might raise an eyebrow at the AWS bill when you replicate the entire architecture in two different regions, but the CEO will probably appreciate the peace of mind this redundancy brings. In our industry, we understand redundancy well, but we’re clueless about the value of degeneracy. This essay is the start of an attempt to change that.

Why Degeneracy?

Some a priori suspicion of degeneracy makes sense. It’s hard to imagine how it would benefit a business or software system. It sounds both more expensive and more complex than redundancy. Let’s examine these assumptions with some “straw man” examples.

Consider a static HTML web site served via Apache on a single server. Here are two ways we could introduce degeneracy:

- Install Apache, Nginx and Lighttpd on the same server, each on a different port, all serving the same HTML content, and each activating depending on the “context” (the port is the context here).

- Take a second server which is used for some other purpose (how about Microsoft Exchange) and stick a copy of the web site on there running via IIS. This time the “context” is the IP address. The same “function” (serving the HTML) can be accomplished via two different mechanisms depending on the context.

I’m pretty sure nobody has ever tried #1. It’s cheaper than two redundant servers, but it’s far less robust. A single hardware failure could bring down the whole thing. The second option fares mildly better in this regard; it eliminates the single point of failure and adds some flexibility. But it just feels wrong. What if the original server fails and we end up juggling mail and web server load on the same machine? How do we keep the content in sync across operating systems? Sounds incredibly inefficient, right?

It took some time for me to realize that my bias towards “efficient” design might be getting in the way of understanding the value of degeneracy. You may have that same bias.

Degeneracy and Redundancy in Nature

Nature has plentiful examples of both redundancy and degeneracy. Your ears, eyes, and even kidneys are examples of redundant systems. Your body’s nutritional needs exhibit degeneracy. Protein is essential to all of your body’s tissues. Thankfully, there are a plethora of foods you can eat to acquire it; ask a vegan. Can you imagine if you had only one “route” to acquire protein in your diet?

Next, consider your body’s ability to move. If you injure a muscle, your body will activate surrounding muscles in new ways to sub-in for the injured muscle while it heals. Different structure, same function: degeneracy. Being able to limp away from injuries is obviously valuable as an evolutionary trait.

Immune therapy and vaccines are technologies which work because of degeneracy in immune responses. Because there are multiple pathways to activate your body’s defenses, you can use a relatively harmless stand-in for the real invader to trigger your immune system to prepare.

Degeneracy and Innovation

Just because degeneracy exists in so many places in nature doesn’t necessarily mean we should emulate it. It’s valuable to think about why it exists so that we can benefit from it when appropriate, as we seem to have learned to do with redundancy.

One leading theory about the purpose of degeneracy is that it provides a way for an organism to strike a balance between rigid genetic “robustness” (traits that are extremely consistent across generations) and wild genetic “variance” (traits which are unpredictable between generations). That balance is something geneticists have been trying to understand since Darwin. Ronald Fisher’s Fundamental Theorem of Natural Selection (1930) describes it:

The better adapted you are, the less adaptable you tend to be.

Fisher realized that highly successful species have little incentive to engender experimentation in their offspring; they’ve already got the right “recipe.” On the flip side, the offspring of a poorly adapted species benefit from even random genetic variation. If you’re at the bottom, everywhere else is up.

That’s not the interesting part, though. The insight in Fisher’s Theorem is that success across generations is what really matters, and the most long-lived species find a way to survive through lots of environmental variation.

This idea is easily generalizable to your career, your company, or your code. It makes obvious, intuitive sense: if you over-optimize to today’s challenges, you’re less prepared for tomorrow’s.

Ever had an excellent spec, perfectly-built code and 100% test coverage and have the product manager tell you the customer changed their mind? I’m going to guess you had to throw all or most of that code away.

Ever worked in a startup with a messy codebase everyone agrees has poor architecture? In that startup, did the PM ever come up with an off-the-wall idea—and instead of resistance, the team said, “actually… we just need to tweak the X and bolt-on a Y, and we could do that pretty easily. It’ll be ugly, but we can do it.”

That first example is the anti-pattern Fisher is talking about. That second example: unintentional degeneracy. Succeeding because of “bad” architecture, not in spite of bad architecture.

For decades, geneticists wondered what forces would help organisms find the balancing point that Fisher described. They knew there had to be more to the story than mutation and natural selection. Mutations are too random to lead to the relative stability we see in the natural world. And natural selection produces far fewer hyper-dominant species than we’d assume. Reality is much more moderate. Researchers are beginning to point to degeneracy as the answer:

“From observing how evolvability scaled with system size, we concluded that degeneracy not only contributes to the discovery of new innovations but that it may be a precondition of evolvability.”

— James Whitacre, Theoretical Biology and Medical Modeling, 2010

Degeneracy works because the future isn’t entirely random; it’s informed and influenced by the present. Instead of having your offspring make random guesses about future needs, it’s better to evolve multiple, flexible, semi-incomplete solutions to today’s problems, which can potentially be recomposed to solve slightly different problems tomorrow.

Nature Succeeds By Failing

Much to the chagrin of people like me who suffer from seasonal allergies, our immune systems are not finely-tuned machines. They seem to be designed to be somewhat inaccurate; many different inputs will trigger the same immune response. This inaccuracy allows us to “adapt” our immune responses within a single generation (i.e. in real time).

When a virus or bacterium (both of which have much shorter generational cycles than humans do) modifies its means of attack, as long as that variation is within the scope of our immune system’s degeneracy, we will be able to fight it. Consider an alternative approach which relied entirely on variation and natural selection. Each human would have a random set of immunities to specific kinds of viruses. The “lucky” folks who happened to be born with the right ones would thrive, and others would fall victim to the more rapidly evolving attackers. Each human generation would be a crapshoot, and the viruses would always win. By being “inefficient” and “inaccurate” and having a relatively general definition of “intruder” in its code, your immune system is able to defend against previously unseen invaders and seemingly “learn”, even without changing anything about its structure or design. Evolution never goes back to the drawing board.

Degeneracy in Code

As I mentioned, I’ve spent many years ruminating on the ways biological robustness, degeneracy and evolvability relate to software. I’ll be writing much, much more about this in the weeks to come, but let me close this essay with some notes on what I think are three of the biggest implications for our field:

- Think Smaller

- DRY is not a Virtue

- Events Not Things

Think Smaller

Although we observe degeneracy at every level in nature from genetic encodings to human communication, it never appears to be “designed in” from the outset. It is a property that emerges organically from the interactions of very small building blocks.

The neurons in your brain are exceptionally simple. They only become powerful in coordination. Individually, they have narrow responsibilities, and what Fisher would describe as a fairly high degree of adaptation. At micro scale, being superadapted carries few of the problems it does at larger scale. The smaller you make your scope, the lower the probability it will change fundamentally over time.

Much of the success of the microservices movement has to do with the “micro” part. Especially when the services are built by isolated teams without a central architect, you’re likely to end up with many small parts, each highly adapted to a very narrow scope, rather than a highly adapted whole. This is a good thing™️. The net result is composable and recombinable in ways that are likely to be more adaptable and better suited for innovation.

The most successful microservices teams I know have so fully embraced degeneracy (even if they don’t call it that) that they not only give each service its own database, but happily duplicate portions of functionality inside other services to avoid being dependent on them.

DRY is Not a Virtue

The value of degeneracy makes clear that strict adherence to the DRY Principle is wrongheaded. Looking back over my career, some of my worst architectural mistakes were places where I “dried up” the code too much, and was left with a lifeless husk too brittle to change.

Not all duplication is bad, and code that your IDE would say is duplicative might in fact describe two subtly different contexts. Martin Fowler’s Three Strikes and You Refactor captures the necessary subtlety much better.
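As a rough illustration of duplication that only looks like duplication, consider the Python sketch below. The function names and validation rules are made up for this example. The first two functions have identical bodies today, but they describe different contexts, so a later (equally hypothetical) rule change to coupon codes leaves usernames untouched.

```python
def is_valid_username(value: str) -> bool:
    """Usernames: 3 to 20 alphanumeric characters."""
    return 3 <= len(value) <= 20 and value.isalnum()


def is_valid_coupon_code(value: str) -> bool:
    """Coupon codes: the same rule as usernames today, but only by coincidence."""
    return 3 <= len(value) <= 20 and value.isalnum()


def is_valid_coupon_code_v2(value: str) -> bool:
    """A later, hypothetical rule change: coupon codes may contain hyphens.

    Because the two rules were never merged, nothing about usernames changes.
    """
    without_hyphens = value.replace("-", "")
    return 3 <= len(value) <= 20 and without_hyphens.isalnum()
```

Had the first two been merged into a single shared validator, the coupon change would have meant either adding a flag to it or splitting it apart again.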

I’ll write more on this later, so for now, let’s leave it at this: DRY is not a standalone virtue. If the only reason you’re making a change is to remove duplication, stop. Try to think about things which will be harder to change independently as a result of your removal of the duplication. Being able to change pieces independently is fundamentally what evolutionary architecture is all about.

When Andy Hunt and Dave Thomas coined the term in The Pragmatic Programmer, they were talking about specific cases where the duplicate code represented ideas that change exactly in sync. They say the danger is that you’ll change one and forget to change the others. That’s pretty rare in my experience, and I think we’ve found ourselves with a cure that’s worse than the disease.

Events Not Things

Research in degeneracy makes clear that the structure of things is far less important than their function. The structures of evolvable things are constantly changing; that’s literally what adaptation is. The functions which are being performed (acquiring protein, locomotion, etc) are much more persistent over time. So why do we spend so much time talking about structures (which need to constantly change), and so little time talking about functions (which rarely do)? The answer, I suspect, is in the question. It’s interesting to talk about things which are new. It’s boring to talk about things which aren’t.

The implication for better software is to focus your coding efforts on the behaviors that need performing, not the things which perform them. Make your software more boring. “Events Not Things” is about avoiding the trap of thinking of your software as an “engine” (a closed system with predictable inputs and outputs) and instead thinking of it more like a garden (an open system which we can influence but can’t really control).

For the analytically-minded folks in our field, this is an extraordinarily foreign concept. We fancy ourselves “engineers”, but for a civil engineer designing a bridge, structure is almost everything. Buildings and bridges are the wrong metaphors for a software system which needs to respond to a rapidly changing world.

Your <User> class is never, ever going to be a perfect representation of reality. The very idea of a “User” is time and context dependent. In version 1.0 of Facebook, “User” meant “College Student,” but the “event” of registering for an account was nearly identical to what it is now. So instead of spending time making the perfect User class, focus architecture on the Events which modify it. Let “User” be the amorphous, constantly changing thing it is in reality and don’t impose some idealized Platonic version of it on your code.
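One way to picture this is the minimal Python sketch below, which assumes a simple event-replay design. The event names, fields, and helper functions are all invented for illustration. The events are the stable, explicit part of the model, while the “user” is just whatever dictionary falls out of replaying them.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, Iterable


@dataclass(frozen=True)
class Event:
    """Something that happened; the stable part of the model."""
    kind: str
    data: Dict[str, Any] = field(default_factory=dict)


def apply(user: Dict[str, Any], event: Event) -> Dict[str, Any]:
    """Return a new user state with one event folded in."""
    if event.kind == "account_registered":
        return {**user, **event.data, "active": True}
    if event.kind == "email_changed":
        return {**user, "email": event.data["email"]}
    if event.kind == "account_closed":
        return {**user, "active": False}
    return user  # unknown events are ignored rather than rejected


def current_user(events: Iterable[Event]) -> Dict[str, Any]:
    """Rebuild the user by replaying its events in order."""
    user: Dict[str, Any] = {}
    for event in events:
        user = apply(user, event)
    return user


history = [
    Event("account_registered", {"email": "a@college.example", "school": "Example U"}),
    Event("email_changed", {"email": "a@work.example"}),
]
user_now = current_user(history)
```

Nothing here fixes what fields a user has. If “User” stops meaning “College Student”, the `school` key simply stops appearing in new registration events, and the registration event itself stays the same.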

Further Reading

More than 2,000 words in and I feel like I’ve just scratched the surface here. If you’re interested in hearing more, I’m giving a talk called Evolutionary Systems for Software at OSCON in July 2019.

- Degeneracy and complexity in biological systems, Proceedings of the National Academy of Sciences of the United States of America, 2001, Gerald Edelman & Joseph A. Gally

- Hidden in plain view: degeneracy in complex systems, Biosystems February 2015, P.H. Mason, J.F. Dominguez, B. Winter, A. Grignolio

- Sloppiness, robustness, and evolvability in systems biology, Current Opinion in Biotechnology, Bryan C. Daniels, Yan-Jiun Chen, James P Sethna, Ryan N Gutenkunst, Christopher R. Myers

- Pervasive Flexibility in Living Technologies through Degeneracy-Based Design, Whitacre and Bender

- Degeneracy and networked buffering: principles for supporting emergent evolvability in agile manufacturing systems, Natural Computing September 2012, Regina Frei, James Whitacre

This blog post originated from a talk I gave at the 7CTOs 0111 Conference in 2018 called What Can Evolutionary Biology Teach Us About Our Architecture?